Abstract

Short Answer finished its first full school year in classrooms in June 2024. By year’s end, it had reached over 50,000 monthly active teachers and students across all 50 states and 8 countries. The case study that follows is our effort to understand how those users experienced Short Answer over the course of the ’23-’24 school year in the United States.

Employing a mixed-methods approach, this study integrates data from user interviews, net promoter score (NPS) surveys, and an end-of-year educator experience survey to assess Short Answer’s effectiveness. Key findings indicate that educators see Short Answer as fostering critical thinking and engagement by enabling student-centered feedback and promoting writing across disciplines. Despite its positive reception, evidenced by an average NPS of 82, educators noted challenges such as user interface issues and time constraints. The study concludes with recommendations for further development of Short Answer to better meet educational needs, including enhancing the user experience and conducting more rigorous efficacy research.

Authors

- Emily Liu, master’s student at the Stanford Graduate School of Education

- Adam Sparks, cofounder of Short Answer

Methodology

This study draws from three sources: interviews with users, in-app net promoter score (NPS) surveys, and an end-of-year educator experience survey. We used a mixed-methods approach to understand Short Answer’s impact more comprehensively and to offset the limitations of each individual data source. We believe this provides a holistic overview of Short Answer’s impact in the ’23-’24 school year while also giving us clear next steps for improving the Short Answer experience.

Interviews | In spring 2024, we conducted 20 phone and video-call interviews with “Short Answer Ambassadors”: instructional coaches and teachers who volunteered to take part in Short Answer’s ambassador program. These individuals could be described as “power users”; they not only use the product regularly but have also chosen to share it with others by volunteering as ambassadors. Interviewees came from a range of geographic and socioeconomic settings in South Carolina, Texas, Illinois, and New Jersey.

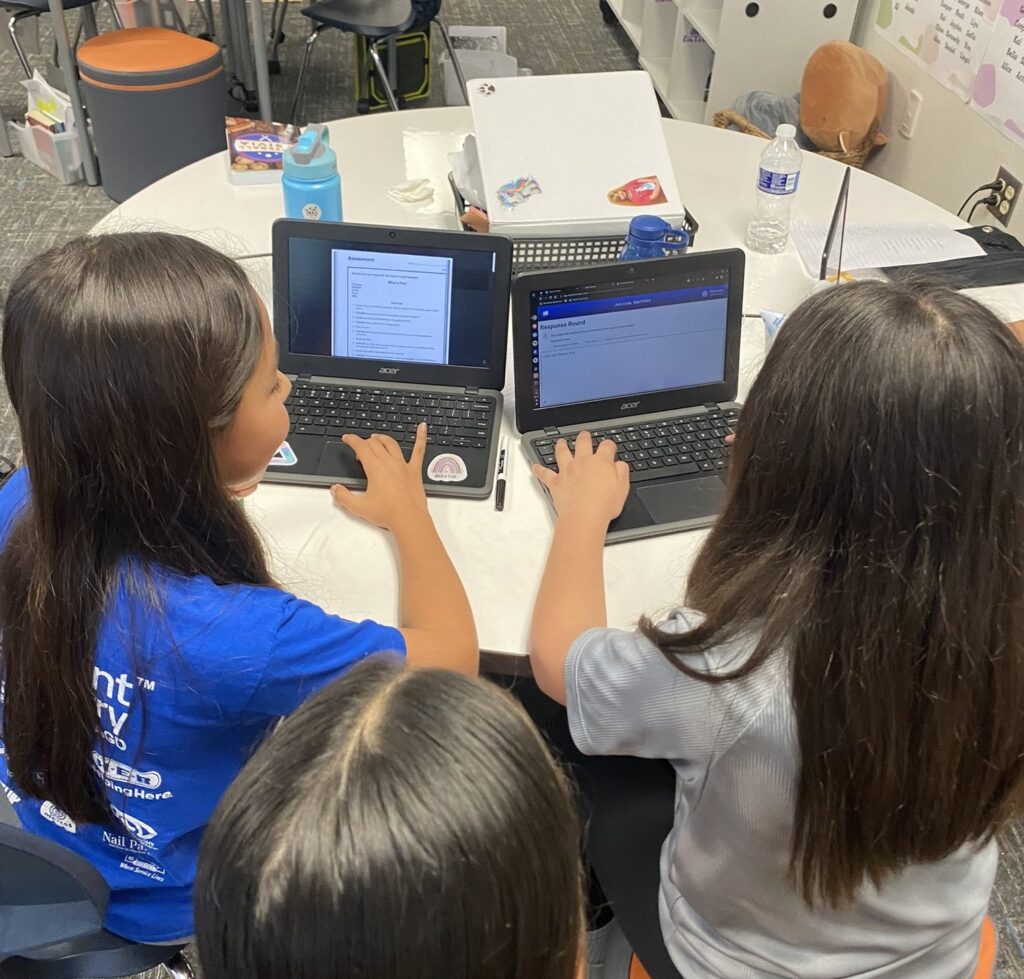

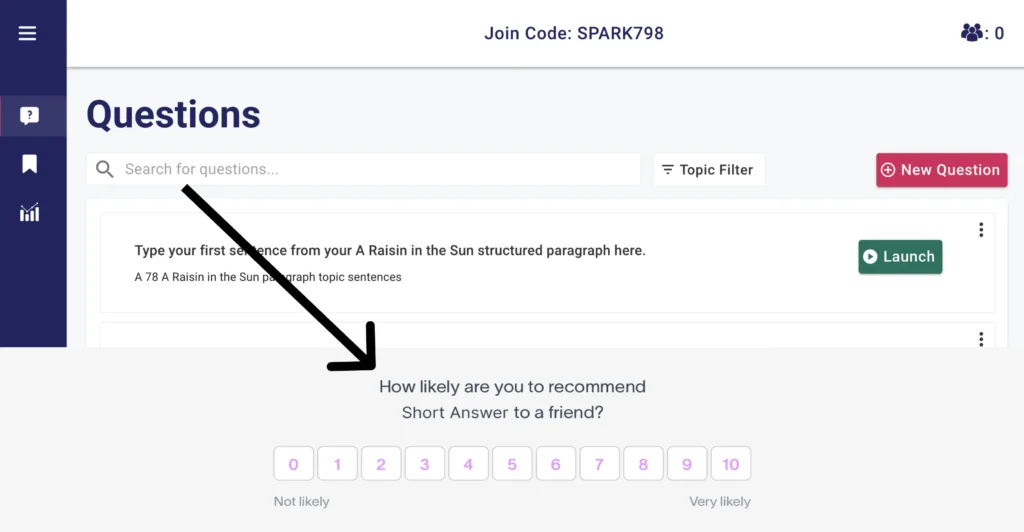

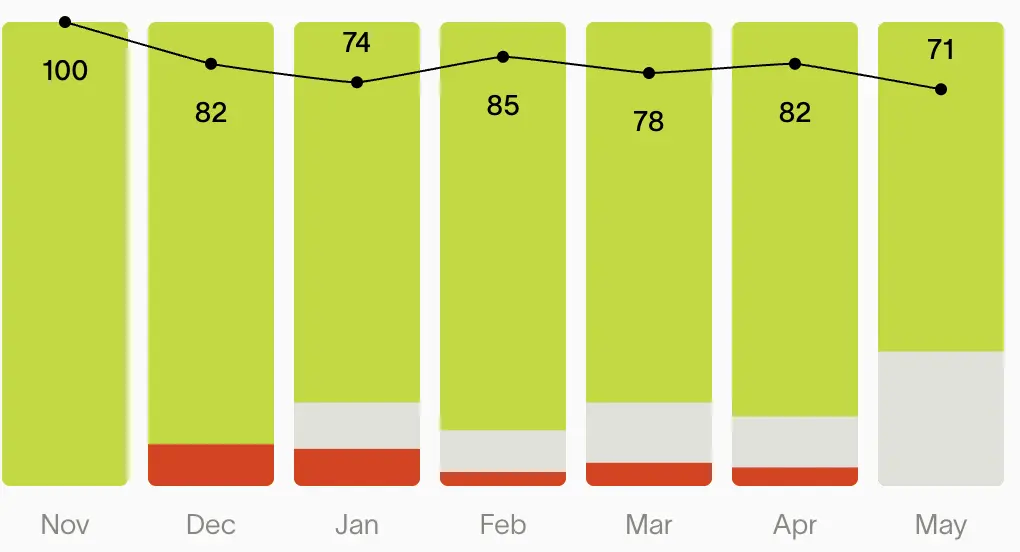

NPS Surveys | In November 2023, Short Answer embedded Qualtrics Delighted, a net promoter score survey tool, into the Short Answer teacher dashboard. The survey was displayed at random to the 9,000+ teachers who use the Short Answer platform. Teachers could select how likely they were to recommend the tool to other educators (see image 1 for reference), and after choosing a score they were prompted to leave an optional comment explaining it. Over the course of the ’23-’24 school year, Short Answer collected 273 responses.

User survey | In May 2024, an educator experience survey was emailed to the 9,000+ educators who have a Short Answer account. By June 1, 2024, 29 educators had completed it, a response rate of roughly 0.3%. The survey measured educator perceptions of the learning gains resulting from Short Answer use, with questions built around the learning outcomes defined in Short Answer’s Theory of Change.

Findings

Interviews

Several themes emerged from the 20 interviews. First, educators described how they saw Short Answer supporting critical thinking in student-centered ways and serving as a form of “penalty-free practice.” Receiving peer feedback prompted students to ask, “Why didn’t I think of that?” and, in one educator’s words, “[put] that power in their hands to not only understand why they’re given that feedback, but to be able to use [it].” Further, as feedback givers, students were challenged to explain why they had chosen particular exemplars. This also gave educators an opportunity to point out that there could be a range of great, creative answers.

Educators spoke about how much they appreciated Short Answer’s ability to make the writing and feedback process engaging. As one educator pointed out, “Just getting kids to write a single sentence is challenging!” But because “they’re writing for their peers,” students cared about doing well, and they wanted to win. As one teacher put it, “the feedback from the kids is so much more impactful than anything I tell them. They really listen to their best friend who sits next to them.” This friendly competition pushed students to improve their writing; in some classrooms, students begged of their own accord to play Battle Royale, which one educator called “the be-all and the end-all.” This was also a great help to educators because “the cool, the engaged [was] all taken care of,” allowing them “as the subject-matter expert . . . to spend time on the content.”

Educators also reported that Short Answer works well across disciplines and for a range of activities. Instructional technology coaches in particular mentioned being drawn to Short Answer for writing-across-the-curriculum initiatives. For example, interviewees described using Short Answer not only for short constructed responses but also to “chunk” longer essays in ELA classes. Grade levels from 3rd grade writing to AP English were mentioned, as were uses ranging from discussing math errors to supporting evidence-based claims in science. One teacher noted that “As the weeks have gone on, their answers have gotten so much more specific, so much stronger.”

Finally, educators discussed how Short Answer was able to serve many types of learners, meeting each of them at their own skill level: “It [gave] them all a place to improve.” At the same time, students who might be “hard on themselves” gained confidence through their peers’ positive and constructive feedback.

In discussing perceived shortcomings of Short Answer, several educators mentioned confusion over the user interface (UI) and the platform’s available functions, including a desire for stronger monitoring of student responses both while students are writing and after they have submitted. Educators also mentioned the constraint of time. A high school social studies teacher from Chicago put it most directly: “We just didn’t have enough time for them to write, provide feedback, and reflect within one lesson. It just took way longer than expected.” Finally, several educators said they were unclear about the difference between the free and premium versions of Short Answer.

All interviews conducted via video call were recorded; a portion of these recordings can be viewed below.

NPS Survey Data

From November 2023 to June 2024, across 273 responses, Short Answer maintained an average net promoter score of 82 (see image below for monthly averages). Respondents were asked, “How likely are you to recommend Short Answer to a friend?” A score of 9 or 10 classified the respondent as a promoter, someone finding a lot of value in Short Answer. A score of 7 or 8 classified them as passive, someone finding some value. A score of 6 or below classified them as a detractor, someone not finding value in Short Answer. These categories are shown in green, gray, and red, respectively, in the chart below.

A total of 231 respondents were promoters, indicating they would recommend Short Answer to a friend. 48 of them left comments (see appendix). Themes in these 48 comments included increased student engagement and motivation, ease of use, educational value, and the anonymity and value of peer feedback. Student engagement was mentioned most often, with the competitive element and peer review identified as key sources of engagement, consistent with comments from the interviews and from the educator experience survey described in the next section. On ease of use, many comments noted the platform’s user-friendliness for both students and educators and described Short Answer as “easy to start using right away.” Short Answer was also praised for enhancing student learning through peer feedback and writing practice. Teachers felt it helped students develop their writing skills in a structured yet enjoyable way, again consistent with data from the interviews and educator experience survey.

A total of 30 respondents were passives. 8 of them left comments (see appendix). These users generally found value in Short Answer, but with reservations. Common issues included needing more preparation time, the cost of the tool, and a desire for more features or flexibility. The expense of the paid version was a noted barrier, particularly for individual teachers. Users also hoped for more customization options in the free plan, such as a higher limit on the number of criteria that can be used in assessments. There was also a desire for more activity types beyond comparisons of student responses.

A total of 12 respondents were detractors. 3 of them left comments (see appendix), which centered on technical issues and usability challenges. These users pointed out technical problems such as a website crash and functionality issues (e.g., students being kicked out of games and unable to rejoin). They also found the interface unfriendly at times. Users suggested improvements such as features that give teachers more control within the platform. They also raised concerns about changes to the Basic/Free Plan introduced in December 2023.
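The category cutoffs above reduce to a standard formula: NPS is the percentage of promoters minus the percentage of detractors, so it ranges from -100 to 100. The Python sketch below applies that formula to the category counts reported in this section. It is a minimal illustration, not necessarily how Qualtrics Delighted computes its dashboard figures, and the function names are hypothetical. Pooling all 273 responses this way gives roughly 80; the 82 reported above is described as an average of monthly scores, which can differ from a pooled figure when the number of responses varies from month to month.

```python
def classify(score: int) -> str:
    """Bucket a 0-10 'likelihood to recommend' score using the standard NPS cutoffs."""
    if not 0 <= score <= 10:
        raise ValueError("NPS scores range from 0 to 10")
    if score >= 9:
        return "promoter"   # 9 or 10
    if score >= 7:
        return "passive"    # 7 or 8
    return "detractor"      # 0 through 6


def net_promoter_score(promoters: int, passives: int, detractors: int) -> float:
    """Hypothetical helper: percentage of promoters minus percentage of detractors."""
    total = promoters + passives + detractors
    if total == 0:
        raise ValueError("no responses")
    # Passives count toward the total but add nothing to the score.
    return 100.0 * (promoters - detractors) / total


# Category counts reported for the '23-'24 school year (231 + 30 + 12 = 273).
pooled = net_promoter_score(promoters=231, passives=30, detractors=12)
print(f"{pooled:.1f}")  # → 80.2
```

Because passives appear only in the denominator, converting passives into promoters raises the score just as converting detractors into passives does.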

Teacher Experience Survey

Respondents to our educator experience survey (n=29) spanned K12. 52% taught high school (9th-12th), 31% taught middle school (6th-8th), and 17% taught elementary school (K-5th). Most taught ELA (55.2%), followed by social studies (13.8%) and science (10.3%), though foreign language and technology design were also represented. The most common states were Texas (31%) and Michigan (10.3%), with 14 states and 2 international locales represented overall.

44.8% of teachers reported using Short Answer occasionally (1-3 times a month), 31% frequently (1-3 times per week), 13.8% rarely (1-3 times per semester), 6.9% very frequently (1+ times per class) and 3.4% never (signed up but hadn’t used it yet).

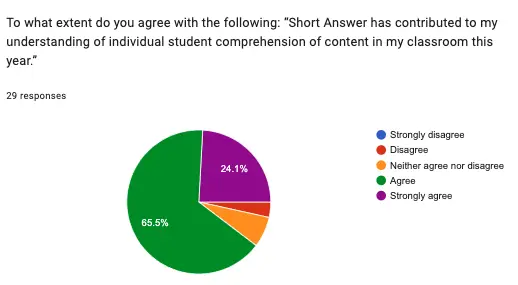

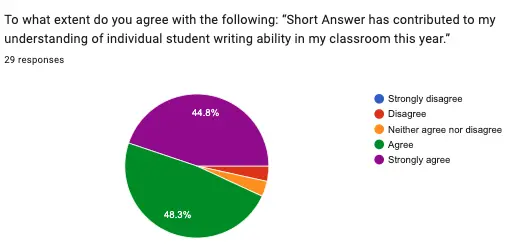

Asked the extent to which they agreed that “Short Answer has contributed to my understanding of individual student comprehension of content in my classroom this year,” 89% agreed or strongly agreed, 6.9% neither agreed nor disagreed, and 3.4% disagreed. For the statement “Short Answer has contributed to my understanding of individual student writing ability in my classroom this year,” 93% agreed, 3.4% neither agreed nor disagreed, and 3.4% disagreed (see charts below for this data).

Key themes from the open-ended follow-up questions echoed the interviews and NPS comments. Educators described Short Answer as a formative assessment tool suited to “impromptu writing” that is not “punitive” and that lets students see and point out errors “in real time” in a safe, anonymous context. This meant that students could provide “true constructive criticism without being mean and hurtful . . . [moving] into much higher levels of their thinking.” For many teachers, Short Answer also provided timely “snapshots” of student understanding, including misconceptions and recurring errors, that helped guide both subsequent class discussions and future lesson plans. Further, teachers reported using Short Answer across a range of topics, from poetry to grammar to standardized test practice.

Educators also pointed out areas for improvement. Some found Short Answer harder to use in larger classes because sessions took longer, and others had questions about how the order of responses was determined. Other respondents wished for a feature allowing students to award success criteria to multiple answers during the comparative judgment process.

Discussion

Limitations

The central limitation of the data presented is self-selection: educators who took part may have been more frequent users of Short Answer than the typical user, especially given that all 20 interviewees were Short Answer Ambassadors. Our results are therefore more representative of this population than of the entire user base. “Of the people who use Short Answer regularly, this is why they like and use Short Answer” may be an accurate summary of the results shared here. Student voices were also entirely absent from the study data.

We took several steps to address these limitations. For example, the NPS and Teacher Experience surveys were intended to reach a more representative sample of Short Answer users than the interview participants. We also tried to understand teachers who had negative experiences with Short Answer: our team personally emailed all 12 individuals who scored Short Answer a 6 or lower on NPS surveys. These emails and requests for paid interviews either went unanswered or were declined. The Teacher Experience survey met a similar fate: it was emailed to over 9,000 educators and drew 29 responses, despite an incentive of a chance to win an Amazon gift card.

The low survey response rate and the lack of replies to our emails likely reflect a simple fact: teachers are extremely time-strapped. They often don’t have the time or energy to dedicate to product efficacy studies or co-design efforts, even with incentives.

Outcomes

Even with the limitations above, the data suggests that Short Answer is a promising tool for writing instruction and formative assessment. The qualitative data shows that educators feel Short Answer is helping them achieve a variety of learning outcomes. Multiple data sources pointed to Short Answer supporting critical thinking, increasing engagement, and empowering students to take charge of their own learning.

The quantitative data shows that Short Answer is being used across multiple subjects and grade levels, and that most survey respondents believed it contributed to their understanding of both student comprehension and writing ability. Given that 93% of survey respondents reported that Short Answer gave them insight into student writing ability, the tool seems particularly well suited for writing instruction. In addition, an NPS of 82 reflects a high level of satisfaction among educators, and most NPS respondents (231 of 273) were promoters.

Next Steps

While Short Answer is off to a strong start, there is room for improvement. Teachers need more control over how long an activity takes and better ways to monitor students while they construct their responses and provide feedback. Although teachers described workarounds, Short Answer needs to address these issues directly moving forward.

Another clear next step is to conduct more rigorous efficacy research that includes student voices and collects data on student learning outcomes associated with the application. Short Answer has included student surveys in previous efficacy studies but would like to conduct more rigorous ones. Our team continues to engage third-party research teams for this purpose. We hope this research can build on the case study presented here to strengthen Short Answer’s body of efficacy evidence over time.

Conclusion

We’re proud to see how Short Answer has helped make writing and learning in K12 classrooms more engaging and meaningful. Just as importantly, we’re excited to continue building and expanding the platform to better serve the needs of educators and students. We can’t wait to see how teachers continue to use Short Answer to help students fall in love with writing.